Data Leadership in Practice: Building the AI-Ready Lakehouse - Part 2

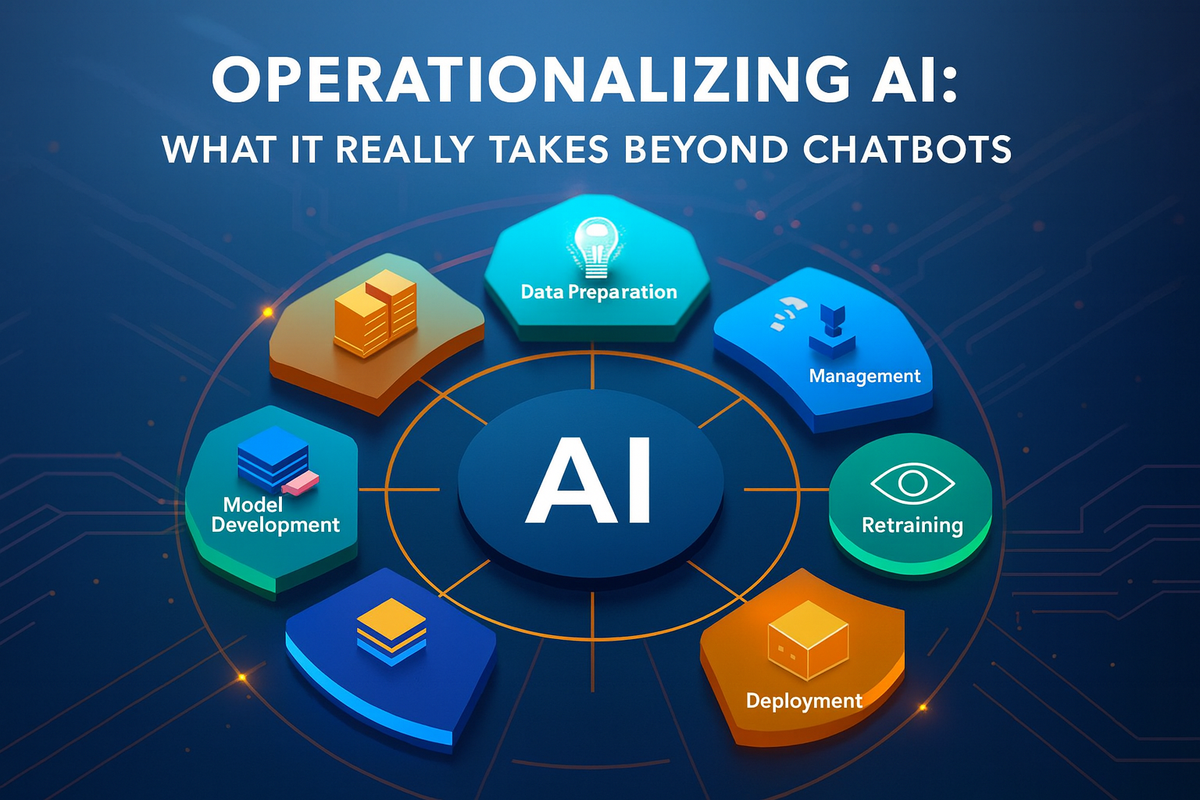

Operationalizing AI: What It Really Takes Beyond Chatbots

TL;DR

Transitioning from traditional analytics to production-grade machine learning systems is not just a technological upgrade but a strategic imperative. This shift enables organizations to move beyond descriptive and diagnostic analysis to predictive and prescriptive insights, driving tangible business outcomes and competitive advantages.

Why This Matters to the Business

- Return on Investment (ROI): Implementing advanced machine learning systems can significantly improve decision-making, leading to better resource allocation, cost savings, and increased revenue. By leveraging AI, businesses can automate complex tasks, reduce operational inefficiencies, and unlock new revenue streams through data-driven insights.

- Regulatory Compliance: As regulatory environments become increasingly stringent, well-governed AI systems help your organization comply with data privacy and security regulations. This not only mitigates the risk of non-compliance penalties but also builds trust with customers and stakeholders.

- Total Cost of Ownership (TCO): While the initial investment in AI and machine learning infrastructure may seem substantial, the long-term benefits often outweigh the costs. Operational efficiencies, reduced downtime, and more accurate decisions contribute to a lower TCO over time. Cloud-based services and open-source tools can further optimize costs.

- Competitive Advantage: Organizations that successfully operationalize AI can gain a significant edge over competitors. The ability to predict market trends, personalize customer interactions, and optimize supply chains can set your business apart in a crowded marketplace.

- Scalability and Flexibility: Modern AI systems are designed to scale with your business needs. Whether it's handling increased data volumes or adapting to new market conditions, these systems provide the flexibility to grow and evolve with your organization.

- Risk Management: Advanced AI systems can identify potential risks and anomalies in real time, allowing teams to act before issues escalate. This capability is crucial for maintaining business continuity and safeguarding against operational disruptions.

By focusing on these strategic priorities, businesses can ensure that their investment in AI and machine learning not only drives technological advancement but also aligns with broader business objectives, ultimately contributing to sustained growth and success.

The Journey from Analytics to AI

Traditional analytics focuses on descriptive and diagnostic analysis, providing insights into what has happened and why. AI, on the other hand, enables predictive and prescriptive analysis, providing insights into what will happen and what actions should be taken. Transitioning from analytics to AI involves several steps, including data preparation, model development, model deployment, and model management.

Data Preparation

Data preparation is the process of cleaning, transforming, and organizing raw data into a format suitable for analysis and modeling. This process is crucial for ensuring data quality and consistency, which are essential for building accurate and reliable AI models.

Data Cleaning

Data cleaning involves identifying and correcting errors, inconsistencies, and inaccuracies in the data. This process may include removing duplicate records, correcting misspellings, and handling missing values.
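The three cleaning steps can be sketched in plain Python. This is a minimal illustration that assumes records arrive as dictionaries. The field names (`id`, `city`, `amount`), the hypothetical `CANONICAL_CITY` misspelling map, and median imputation are illustrative assumptions, not a prescribed method.

```python
from statistics import median

# Hypothetical lookup of known misspellings to their canonical values.
CANONICAL_CITY = {"Londn": "London", "Pariss": "Paris"}

def clean(records):
    """Correct known misspellings, drop duplicates, and impute missing amounts."""
    cleaned, seen = [], set()
    for record in records:
        r = dict(record)  # copy so the caller's data is not mutated
        r["city"] = CANONICAL_CITY.get(r["city"], r["city"])
        # Correct spellings first so near-duplicates collapse into exact duplicates.
        key = (r["id"], r["city"], r["amount"])
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(r)

    # Fill missing amounts with the median of the observed values.
    observed = [r["amount"] for r in cleaned if r["amount"] is not None]
    fill = median(observed) if observed else 0.0
    for r in cleaned:
        if r["amount"] is None:
            r["amount"] = fill
    return cleaned

rows = [
    {"id": 1, "city": "Londn", "amount": 10.0},
    {"id": 1, "city": "London", "amount": 10.0},  # duplicate once the spelling is fixed
    {"id": 2, "city": "Paris", "amount": None},
    {"id": 3, "city": "Pariss", "amount": 30.0},
]
print(clean(rows))
```

The order matters. Fixing spellings before deduplicating catches records that differ only by a typo.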

Data Transformation

Data transformation involves converting data from one format or structure into another. This process may include normalizing data, aggregating data, and encoding categorical variables.
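Here is a minimal sketch of the three transformations named above, using only the standard library. The function names are hypothetical. In practice these steps usually run in a dataframe or SQL engine, and min-max scaling is only one of several normalization choices.

```python
def min_max_normalize(values):
    """Rescale numbers to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [(v - lo) / span if span else 0.0 for v in values]

def one_hot_encode(categories):
    """Encode each category as a 0/1 vector over the sorted vocabulary."""
    vocab = sorted(set(categories))
    position = {c: i for i, c in enumerate(vocab)}
    vectors = []
    for c in categories:
        vec = [0] * len(vocab)
        vec[position[c]] = 1
        vectors.append(vec)
    return vocab, vectors

def aggregate_totals(pairs):
    """Aggregate (key, value) pairs into per-key totals, e.g. revenue per region."""
    totals = {}
    for key, value in pairs:
        totals[key] = totals.get(key, 0) + value
    return totals

print(min_max_normalize([10, 20, 30]))          # [0.0, 0.5, 1.0]
print(one_hot_encode(["red", "blue", "red"]))   # (['blue', 'red'], [[0, 1], [1, 0], [0, 1]])
print(aggregate_totals([("eu", 5), ("us", 3), ("eu", 2)]))  # {'eu': 7, 'us': 3}
```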

Data Organization

Data organization involves structuring and formatting data in a way that facilitates analysis and modeling. This process may include creating tables, defining relationships, and indexing data.
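To make tables, relationships, and indexes concrete, here is a sketch that uses Python's built-in `sqlite3` module as a stand-in for the lakehouse's table layer. The `customers` and `orders` schema is hypothetical. The same ideas carry over to other engines: primary keys, a foreign-key relationship, and an index on the join column.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name TEXT NOT NULL
);
CREATE TABLE orders (
    order_id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),  -- relationship
    amount REAL NOT NULL
);
-- Index the foreign key so per-customer lookups and joins avoid full scans.
CREATE INDEX idx_orders_customer ON orders(customer_id);
""")
conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Grace")])
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)", [(10, 1, 25.0), (11, 1, 15.0), (12, 2, 40.0)]
)

# Join through the relationship to produce a per-customer feature (total spend).
rows = conn.execute("""
SELECT c.name, SUM(o.amount) AS total
FROM customers c JOIN orders o ON o.customer_id = c.customer_id
GROUP BY c.customer_id
ORDER BY c.customer_id
""").fetchall()
print(rows)  # [('Ada', 40.0), ('Grace', 40.0)]
```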

Model Development

Model development is the process of building and training machine learning models. This process involves selecting appropriate algorithms, tuning hyperparameters, and evaluating model performance.

Algorithm Selection

Algorithm selection involves choosing the right machine learning algorithm for the task at hand. This choice depends on various factors, including the nature of the data, the complexity of the problem, and the desired outcome.

Hyperparameter Tuning

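Hyperparameter tuning involves adjusting the settings that control how an algorithm learns (for example, learning rate, tree depth, or regularization strength), which are set before training rather than learned from the data, to optimize model performance. This process may include techniques such as grid search, random search, and Bayesian optimization.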
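Grid search and random search can be sketched with a toy objective. `validation_error` is a hypothetical stand-in for training a model and scoring it on validation data, and the search spaces are assumptions. Bayesian optimization, which uses past trials to choose the next candidate, is left to dedicated libraries.

```python
import itertools
import random

def validation_error(learning_rate, depth):
    """Hypothetical stand-in for 'train with these settings, score on validation data'."""
    return (learning_rate - 0.1) ** 2 + (depth - 4) ** 2 / 100

def grid_search(grid):
    """Evaluate every combination in the grid and keep the lowest error."""
    best = None
    for lr, depth in itertools.product(grid["learning_rate"], grid["depth"]):
        err = validation_error(lr, depth)
        if best is None or err < best[0]:
            best = (err, {"learning_rate": lr, "depth": depth})
    return best

def random_search(space, n_trials, seed=0):
    """Sample a fixed budget of random configurations and keep the lowest error."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = {
            "learning_rate": rng.uniform(*space["learning_rate"]),
            "depth": rng.randint(*space["depth"]),
        }
        err = validation_error(**params)
        if best is None or err < best[0]:
            best = (err, params)
    return best

print(grid_search({"learning_rate": [0.01, 0.1, 0.3], "depth": [2, 4, 6]}))
print(random_search({"learning_rate": (0.001, 0.5), "depth": (2, 8)}, n_trials=20))
```

Grid search cost grows multiplicatively with each added hyperparameter. Random search spends a fixed trial budget, which often works better when only a few hyperparameters really matter.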

Model Evaluation

Model evaluation involves assessing the performance of the machine learning model using appropriate metrics and techniques. This process may include techniques such as cross-validation, holdout validation, and bootstrapping.
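Cross-validation and bootstrapping can be sketched without any ML library. The labels and the majority-class baseline below are hypothetical placeholders for a real dataset and model. `k_fold_indices` holds out each example exactly once. `bootstrap_ci` computes a percentile bootstrap interval around the mean fold score.

```python
import random
from statistics import mean

def k_fold_indices(n, k, seed=0):
    """Yield (train_idx, test_idx) pairs; every index lands in exactly one test fold."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]

def bootstrap_ci(scores, n_resamples=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the mean of `scores`."""
    rng = random.Random(seed)
    means = sorted(mean(rng.choices(scores, k=len(scores))) for _ in range(n_resamples))
    return means[int(n_resamples * alpha / 2)], means[int(n_resamples * (1 - alpha / 2)) - 1]

# Hypothetical labels and a trivial baseline "model" that predicts the training majority class.
labels = [0, 1, 1, 0, 1, 1, 1, 0, 1, 1]
fold_scores = []
for train, test in k_fold_indices(len(labels), k=5):
    majority = 1 if sum(labels[j] for j in train) * 2 >= len(train) else 0
    fold_scores.append(sum(labels[j] == majority for j in test) / len(test))
print(mean(fold_scores), bootstrap_ci(fold_scores))
```

With only five fold scores the interval is crude. The sketch shows the mechanics, not a recommended sample size.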

Model Deployment

Model deployment is the process of integrating the machine learning model into the production environment. This process involves creating APIs, building user interfaces, and ensuring scalability and reliability.

API Creation

API creation involves developing application programming interfaces (APIs) that enable the machine learning model to interact with other systems and applications. This process may include techniques such as RESTful API design, GraphQL, and gRPC.
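A RESTful prediction endpoint can be sketched with Python's standard-library `http.server`. The `/v1/predict` route, the JSON request shape, and the fixed `WEIGHTS` scoring rule are hypothetical. A production service would typically use a dedicated web framework or model server. Keeping `handle_predict` separate from the HTTP handler means request validation can be tested without starting a server.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical stand-in for a trained model: a fixed linear score.
WEIGHTS = {"amount": 0.01, "num_items": 0.1}

def handle_predict(body: bytes):
    """Validate a JSON request body and return (HTTP status, JSON response bytes)."""
    try:
        features = json.loads(body)["features"]
        if not isinstance(features, dict):
            raise TypeError("'features' must be a JSON object")
        score = sum(WEIGHTS.get(k, 0.0) * float(v) for k, v in features.items())
        return 200, json.dumps({"score": score}).encode()
    except (ValueError, KeyError, TypeError) as exc:
        # Malformed JSON, a missing key, or non-numeric values are client errors.
        return 400, json.dumps({"error": str(exc)}).encode()

class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/v1/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_predict(self.rfile.read(length))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

def serve(port=8080):
    """Blocking development server; not hardened for production traffic."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()
```

Calling `serve()` starts a blocking server on port 8080. A client would then `POST` a body such as `{"features": {"amount": 100, "num_items": 2}}` to `/v1/predict`.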

User Interface Development

User interface development involves building user interfaces that enable users to interact with the machine learning model. This process may include techniques such as web development, mobile development, and desktop development.

Scalability and Reliability

Scalability and reliability involve ensuring that the machine learning model can handle increasing volumes of data and requests without disrupting existing systems. This process may include techniques such as load balancing, auto-scaling, and fault tolerance.
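Two of these techniques fit in a few lines: round-robin load balancing with failover across replicas, and a proportional auto-scaling rule that sizes the replica pool from observed utilization. The replica callables, the 60% target, and the 10-replica cap are assumptions for illustration. In practice a load balancer or orchestrator does this work; for example, the Kubernetes Horizontal Pod Autoscaler uses a formula of the same proportional shape.

```python
import itertools
import math

class RoundRobinBalancer:
    """Send requests to replicas in turn, failing over past replicas that are down."""

    def __init__(self, replicas):
        self.replicas = list(replicas)
        self._order = itertools.cycle(range(len(self.replicas)))

    def call(self, request):
        errors = []
        for _ in range(len(self.replicas)):  # try each replica at most once per request
            replica = self.replicas[next(self._order)]
            try:
                return replica(request)
            except ConnectionError as exc:
                errors.append(exc)
        raise RuntimeError(f"all {len(self.replicas)} replicas failed: {errors}")

def desired_replicas(current, utilization, target=0.6, max_replicas=10):
    """Proportional auto-scaling: resize so average utilization approaches `target`."""
    return max(1, min(max_replicas, math.ceil(current * utilization / target)))

def make_replica(name):
    return lambda request: f"{name} handled {request}"

def unavailable(request):
    raise ConnectionError("replica unavailable")

lb = RoundRobinBalancer([make_replica("a"), unavailable, make_replica("c")])
print([lb.call(i) for i in range(4)])  # ['a handled 0', 'c handled 1', 'a handled 2', 'c handled 3']
print(desired_replicas(current=3, utilization=0.9))  # 5
```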

Model Management

Model management is the process of monitoring, maintaining, and updating the machine learning model. This process involves tracking model performance, detecting drift, and retraining the model as needed.

Performance Tracking

Performance tracking involves monitoring the performance of the machine learning model using appropriate metrics and techniques. This process may include techniques such as logging, dashboards, and alerts.
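A rolling metric brings logging, dashboards, and alerts together. This minimal sketch assumes ground-truth labels eventually arrive for each prediction. The monitor logs accuracy over the last `window` outcomes and raises an alert when it falls below a threshold. The window size and threshold are hypothetical and would be tuned per model.

```python
import logging
from collections import deque

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("model-monitor")

class RollingAccuracyMonitor:
    """Track accuracy over the last `window` labeled predictions and alert on drops."""

    def __init__(self, window=100, threshold=0.8):
        self.outcomes = deque(maxlen=window)
        self.threshold = threshold
        self.alerts = []

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)
        acc = sum(self.outcomes) / len(self.outcomes)
        log.info("rolling_accuracy=%.3f n=%d", acc, len(self.outcomes))  # dashboard feed
        # Alert only once the window is full, so early noise does not page anyone.
        if len(self.outcomes) == self.outcomes.maxlen and acc < self.threshold:
            self.alerts.append(acc)
            log.warning("ALERT: rolling accuracy %.3f below %.3f", acc, self.threshold)
        return acc

monitor = RollingAccuracyMonitor(window=4, threshold=0.75)
for pred, actual in [(1, 1), (0, 0), (1, 1), (1, 0), (0, 1)]:
    monitor.record(pred, actual)
print(monitor.alerts)  # [0.5]
```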

Drift Detection

Drift detection involves identifying changes in the data or the environment that may affect the performance of the machine learning model. This process may include techniques such as statistical process control, change point detection, and anomaly detection.
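One technique that serves as both statistical process control and change point detection is a two-sided CUSUM (cumulative sum) chart. It accumulates deviations from a baseline mean and signals when the running sum exceeds a threshold. The baseline mean, the slack `k`, and the threshold `h` below are assumed values; in practice they are estimated from a stable reference period.

```python
def cusum(values, target_mean, k, h):
    """Two-sided CUSUM: return the index where a sustained shift is first signaled, else None.

    k is the slack (deviations smaller than k are ignored) and h is the decision
    threshold; both are in the same units as `values`.
    """
    upper = lower = 0.0
    for i, x in enumerate(values):
        upper = max(0.0, upper + (x - target_mean - k))  # accumulates upward drift
        lower = max(0.0, lower + (target_mean - x - k))  # accumulates downward drift
        if upper > h or lower > h:
            return i
    return None

# Hypothetical hourly mean of a model input feature; the baseline mean is 50.
values = [50, 51, 49, 50, 52, 48, 55, 56, 54, 55, 56]
print(cusum(values, target_mean=50, k=1.0, h=8.0))  # 7
```

The slack `k` absorbs small fluctuations. A sustained shift (here from about 50 to about 55, starting at index 6) accumulates until it crosses `h`. This catches persistent changes that a single-point threshold might miss.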

Model Retraining

Model retraining involves updating the machine learning model with new data to ensure that it remains accurate and reliable. This process may include techniques such as online learning, transfer learning, and continuous integration/continuous deployment (CI/CD).
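Online learning is the most self-contained of these to illustrate. This minimal sketch assumes a linear regression model and a stream of labeled examples. Each new example nudges the weights with one stochastic gradient descent step, so the model adapts without a full retrain. The class name, learning rate, and synthetic stream (`y = 2x + 1`) are hypothetical.

```python
import random

class OnlineLinearRegressor:
    """Linear model updated one example at a time with stochastic gradient descent (SGD)."""

    def __init__(self, n_features, learning_rate=0.05):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = learning_rate

    def predict(self, x):
        return sum(wi * xi for wi, xi in zip(self.w, x)) + self.b

    def update(self, x, y):
        """Take one SGD step on squared error for a single (x, y) example."""
        err = self.predict(x) - y  # gradient of 0.5 * err**2 w.r.t. w_i is err * x_i
        self.w = [wi - self.lr * err * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * err
        return err

# Hypothetical stream of fresh labeled examples following y = 2x + 1.
rng = random.Random(0)
model = OnlineLinearRegressor(n_features=1, learning_rate=0.1)
for _ in range(2000):
    x = rng.random()
    model.update([x], 2 * x + 1)
print(round(model.w[0], 2), round(model.b, 2))  # close to 2.0 and 1.0
```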

Integrating ML Tooling

To operationalize AI effectively, enterprises need to integrate a suite of ML tools that support the entire lifecycle of machine learning models:

Figure: Operational AI Lifecycle with Tooling Integration – This diagram illustrates how tools like MLflow, Vertex Pipelines, Amazon SageMaker, Feast, and Vertex AI align to the stages of data preparation, development, deployment, monitoring, and retraining in enterprise AI systems.

MLflow, Vertex AI, Amazon SageMaker

These platforms provide comprehensive environments for developing, training, and deploying machine learning models. They offer capabilities for experiment tracking, model management, and deployment, ensuring that models can be efficiently transitioned from development to production.

MLflow

MLflow is an open-source platform for managing the end-to-end machine learning lifecycle. It provides tools for experiment tracking, model packaging, and model deployment.

| Feature | Description |
|---|---|
| Experiment Tracking | MLflow provides tools for tracking experiments, enabling data teams to log parameters, metrics, and artifacts. This tracking improves reproducibility and simplifies model management. |
| Model Packaging | MLflow provides tools for packaging models, enabling data teams to create reusable and shareable model artifacts. This packaging improves operational efficiency and simplifies model deployment. |
| Model Deployment | MLflow provides tools for deploying models, enabling data teams to integrate models into production environments. This deployment improves scalability and reliability. |

Vertex AI

Vertex AI is a unified machine learning platform provided by Google Cloud. It offers a range of tools for building, deploying, and managing machine learning models, including AutoML for automated model training and Vertex Pipelines for workflow orchestration.

| Feature | Description |
|---|---|
| AutoML | Vertex AI provides AutoML, enabling data teams to automate the process of model training. This automation improves operational efficiency and simplifies model development. |
| Vertex Pipelines | Vertex Pipelines enables data teams to orchestrate workflows for model development and deployment. This orchestration improves operational efficiency and simplifies model management. |
| Integration | Vertex AI integrates with a wide range of Google Cloud services, including BigQuery, Cloud Storage, and Google Kubernetes Engine (GKE). This integration enables enterprises to leverage their existing Google Cloud investments and tools. |

Amazon SageMaker

Amazon SageMaker is a fully managed machine learning service provided by AWS. It provides a range of tools for building, training, and deploying machine learning models, including built-in algorithms, Jupyter notebooks for interactive development, and Amazon SageMaker Pipelines for workflow orchestration.

| Feature | Description |
|---|---|
| Built-In Algorithms | Amazon SageMaker provides built-in algorithms, enabling data teams to leverage pre-built models for common tasks. This capability improves operational efficiency and simplifies model development. |
| Jupyter Notebooks | Amazon SageMaker provides Jupyter notebooks, enabling data teams to develop and test models interactively. This capability improves collaboration and simplifies model development. |
| Amazon SageMaker Pipelines | Amazon SageMaker Pipelines enables data teams to orchestrate workflows for model development and deployment. This orchestration improves operational efficiency and simplifies model management. |

Feast (Feature Stores)

Feature stores are crucial for managing and serving machine learning features consistently across models and applications, so that the same feature definitions are used during training and in production serving. They keep features readily available, reducing the time and effort required for feature engineering.

Feast

Feast is an open-source feature store for machine learning. It provides a centralized repository for storing and serving features, ensuring consistency and reducing duplication. Feast integrates with various data sources and provides capabilities for feature discovery, versioning, and monitoring.

| Feature | Description |
|---|---|
| Centralized Repository | Feast provides a centralized repository for storing and serving features, ensuring consistency and reducing duplication. This centralization improves operational efficiency and simplifies feature management. |
| Feature Discovery | Feast provides capabilities for feature discovery, enabling data teams to discover and reuse features. This discovery improves collaboration and simplifies feature engineering. |
| Feature Versioning | Feast feature definitions are declared as code in a feature repository, so data teams can track changes to features over time through version control. This improves data governance and simplifies feature management. |

Real-World Use Cases

The application of operationalized AI spans various domains, including:

Fraud Detection

AI models can analyze transactional data in real time to identify fraudulent activities, enabling enterprises to take immediate action and mitigate risks.

Example

For example, a credit card company can use AI models to analyze transaction data and detect anomalies that may indicate fraudulent activities. The models can be trained on historical transaction data to identify patterns and behaviors associated with fraud. Real-time transaction data can be ingested and analyzed using streaming technologies, and the models can generate alerts for suspicious transactions. These alerts can be sent to the fraud detection team for further investigation and action.
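The streaming scoring step can be sketched with per-card running statistics. This is a simple statistical baseline, not a trained fraud model. It keeps each card's mean and standard deviation up to date with Welford's online algorithm and flags transactions far above that card's own history. The z-score threshold, minimum history, and sample stream are assumptions.

```python
import math
from collections import defaultdict

class RunningStats:
    """Running mean and sample standard deviation via Welford's online algorithm."""

    def __init__(self):
        self.n, self.mean, self.m2 = 0, 0.0, 0.0

    def update(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def std(self):
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0

def flag_transactions(stream, z_threshold=3.0, min_history=5):
    """Yield (card_id, amount, z) for amounts far above that card's own history."""
    stats = defaultdict(RunningStats)
    for card_id, amount in stream:
        s = stats[card_id]
        if s.n >= min_history and s.std() > 0:
            z = (amount - s.mean) / s.std()
            if z > z_threshold:
                yield card_id, amount, round(z, 1)
        s.update(amount)  # update after scoring so a transaction cannot mask itself

# Hypothetical stream of (card_id, amount) events, e.g. consumed from a message queue.
stream = [("card-1", a) for a in (20, 25, 22, 18, 24, 21)] + [("card-1", 400), ("card-2", 50)]
for alert in flag_transactions(stream):
    print("ALERT", alert)
```

Welford's update needs only constant memory per card and avoids re-reading history. This suits a streaming job that sees each transaction once.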

Recommendation Systems

By leveraging user behavior data, AI-driven recommendation systems can provide personalized suggestions, enhancing user engagement and satisfaction.

Example

For instance, an e-commerce platform can use AI models to analyze user behavior data, such as browsing history, purchase history, and search queries, to generate personalized product recommendations. The models can be trained on historical user behavior data to identify patterns and preferences. Real-time user behavior data can be ingested and analyzed using streaming technologies, and the models can generate recommendations that are displayed to the user in real time. This enhances the user experience and increases the likelihood of conversion.
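A very simple recommendation baseline is item-to-item co-occurrence: items that often appear in the same user's history are recommended together. The sketch below applies that technique to hypothetical purchase histories. Production systems typically use learned models such as matrix factorization or neural recommenders. The input (user behavior) and output (a ranked list of unseen items) stay the same.

```python
from collections import Counter, defaultdict
from itertools import combinations

def build_cooccurrence(histories):
    """Count how often each pair of items appears in the same user's history."""
    co = defaultdict(Counter)
    for items in histories.values():
        for a, b in combinations(sorted(set(items)), 2):
            co[a][b] += 1
            co[b][a] += 1
    return co

def recommend(user_items, co, k=3):
    """Score unseen items by co-occurrence with the user's items; return the top k."""
    seen = set(user_items)
    scores = Counter()
    for item in seen:
        for other, count in co[item].items():
            if other not in seen:
                scores[other] += count
    # Highest score first; ties broken alphabetically so results are deterministic.
    return [item for item, _ in sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))[:k]]

histories = {
    "u1": ["laptop", "mouse", "bag"],
    "u2": ["laptop", "mouse"],
    "u3": ["laptop", "monitor"],
    "u4": ["mouse", "mousepad"],
}
co = build_cooccurrence(histories)
print(recommend(["laptop"], co))  # ['mouse', 'bag', 'monitor']
```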

Anomaly Detection

AI models can detect anomalies in operational data, helping enterprises identify potential issues before they escalate into significant problems.

Example

For example, a manufacturing company can use AI models to analyze sensor data from production equipment to detect anomalies that may indicate potential issues. The models can be trained on historical sensor data to identify normal operating conditions and patterns. Real-time sensor data can be ingested and analyzed using streaming technologies, and the models can generate alerts for anomalies that deviate from normal operating conditions. These alerts can be sent to the maintenance team for further investigation and action, helping to prevent equipment failures and minimize downtime.
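The check for readings that deviate from normal operating conditions can be sketched as a rolling z-score over a trailing window of sensor readings. The window length, the 4-sigma threshold, and the synthetic vibration readings are assumptions. Real deployments often use multivariate or learned models, but the alerting logic is similar.

```python
from collections import deque
from statistics import mean, stdev

def rolling_anomalies(readings, window=20, z_threshold=4.0):
    """Return (index, value) for readings far outside the trailing window's normal range."""
    history = deque(maxlen=window)
    alerts = []
    for t, value in enumerate(readings):
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            if sigma > 0 and abs(value - mu) / sigma > z_threshold:
                alerts.append((t, value))
                continue  # keep anomalies out of the baseline window
        history.append(value)
    return alerts

# Hypothetical vibration readings: a steady cycle, one spike, then normal again.
readings = [1.0 + 0.01 * (i % 5) for i in range(30)] + [2.5, 1.02, 1.02, 1.02]
print(rolling_anomalies(readings))  # [(30, 2.5)]
```

Anomalous readings are kept out of the baseline so that a single spike does not inflate the window's standard deviation. The trade-off is that a permanent level shift keeps alerting until the baseline is reset. That may be the desired behavior for a maintenance team.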

Building CI/CD for Models

Establishing Continuous Integration and Continuous Deployment (CI/CD) pipelines for machine learning models is essential for maintaining their performance and relevance over time. This involves:

Versioning

Keeping track of different versions of models to ensure that changes can be rolled back if necessary.

Example

For example, a financial institution may have multiple versions of a credit scoring model. Each version may be trained on different datasets or use different algorithms. By keeping track of different versions, the institution can compare the performance of different models and roll back to a previous version if necessary.
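This is a minimal sketch of version tracking with rollback, built on a hypothetical in-memory registry; tools such as the MLflow Model Registry provide this capability in practice. Each registered version records what it was trained with. Promotion keeps a history, so rollback restores the previous production version. The algorithm names, dataset labels, and AUC figures are illustrative.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class ModelVersion:
    version: int
    algorithm: str
    training_data: str
    auc: float

@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry: append-only versions plus a movable production pointer."""
    versions: List[ModelVersion] = field(default_factory=list)
    production: Optional[int] = None
    previous: List[int] = field(default_factory=list)

    def register(self, algorithm, training_data, auc):
        mv = ModelVersion(len(self.versions) + 1, algorithm, training_data, auc)
        self.versions.append(mv)
        return mv.version

    def promote(self, version):
        if not 1 <= version <= len(self.versions):
            raise ValueError(f"unknown version {version}")
        if self.production is not None:
            self.previous.append(self.production)  # remember what to roll back to
        self.production = version

    def rollback(self):
        if not self.previous:
            raise RuntimeError("no earlier production version to roll back to")
        self.production = self.previous.pop()
        return self.production

    def best_by_auc(self):
        return max(self.versions, key=lambda v: v.auc).version

reg = ModelRegistry()
v1 = reg.register("logistic_regression", "applications_2022", auc=0.78)
v2 = reg.register("gradient_boosting", "applications_2023", auc=0.81)
reg.promote(v1)
reg.promote(v2)
print(reg.best_by_auc(), reg.production)  # 2 2
reg.rollback()
print(reg.production)  # 1
```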

Drift Monitoring

Continuously monitoring model performance to detect any degradation or drift, which may indicate that the model needs to be retrained.

Example

For instance, a retail organization may have a demand forecasting model that is used to predict sales for different products. Over time, the model's performance may degrade due to changes in consumer behavior or market conditions. By continuously monitoring the model's performance, the organization can detect any degradation or drift and retrain the model using the latest data.
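One common way to quantify drift in a model's inputs or outputs is the Population Stability Index (PSI). PSI is not named in the text above; it is offered as one illustrative technique. It compares the binned distribution of a recent sample against a baseline, summing (actual share − expected share) × ln(actual share / expected share) over bins. The sketch uses equal-width bins over the baseline range, though quantile bins are also common, and the synthetic demand samples are hypothetical. A frequently cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but thresholds should be validated per model.

```python
import math
import random

def psi(expected, actual, bins=10, eps=1e-4):
    """Population Stability Index of `actual` relative to the baseline sample `expected`."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the baseline range fall into the edge bins.
            i = min(bins - 1, max(0, int((v - lo) / width)))
            counts[i] += 1
        # Floor at eps so empty bins do not produce log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

rng = random.Random(42)
baseline = [rng.gauss(100, 10) for _ in range(5000)]  # e.g. weekly demand at training time
recent_same = [rng.gauss(100, 10) for _ in range(5000)]
recent_shifted = [rng.gauss(115, 10) for _ in range(5000)]
print(round(psi(baseline, recent_same), 3), round(psi(baseline, recent_shifted), 3))
```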

Continuous Retraining Pipelines

Automating the retraining process to ensure that models are updated with the latest data and remain accurate and effective.

Example

For example, a healthcare organization may have a predictive model that is used to identify patients at risk of developing chronic conditions. The model may be trained on historical patient data, including demographic information, medical history, and lifestyle factors. By automating the retraining process, the organization can ensure that the model is updated with the latest patient data and remains accurate and effective in identifying at-risk patients.
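The automated step can be sketched as a champion/challenger gate. On each scheduled run, train a challenger on the latest data, compare it with the production champion on a holdout set, and promote it only if it is meaningfully better. The "model" here is a hypothetical stand-in (a single risk-score cutoff), and the data and `min_gain` margin are assumptions. A real pipeline would train an actual model and run under a CI/CD or orchestration tool.

```python
from statistics import mean

def accuracy(threshold, rows):
    """Share of (score, label) rows where 'score >= threshold' matches the label."""
    return mean(1.0 if (score >= threshold) == bool(label) else 0.0 for score, label in rows)

def fit_threshold(rows):
    """Hypothetical 'training': choose the risk-score cutoff with the best training accuracy."""
    return max(sorted({score for score, _ in rows}), key=lambda t: accuracy(t, rows))

def retraining_step(champion, new_train, holdout, min_gain=0.01):
    """One scheduled run: retrain a challenger and promote it only if it clearly wins."""
    challenger = fit_threshold(new_train)
    champ_acc, chall_acc = accuracy(champion, holdout), accuracy(challenger, holdout)
    promoted = chall_acc >= champ_acc + min_gain
    return (challenger if promoted else champion), promoted

# Hypothetical (risk score, developed condition) pairs from the latest data refresh.
new_train = [(0.2, 0), (0.4, 0), (0.6, 0), (0.65, 0), (0.7, 1), (0.8, 1), (0.9, 1)]
holdout = [(0.3, 0), (0.55, 0), (0.62, 0), (0.75, 1), (0.85, 1)]
print(retraining_step(0.5, new_train, holdout))  # (0.7, True)
```

The `min_gain` margin keeps the pipeline from swapping models over noise-level differences. Every promotion then reflects a measurable improvement on held-out data.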

Evaluating AI Vendors

When evaluating AI vendors, it is important to consider several factors, including the vendor's expertise, the quality of their models, and their ability to integrate with existing systems. Additionally, it is important to consider the vendor's approach to data privacy and security, as well as their ability to provide ongoing support and maintenance.

Expertise

The vendor's expertise in the specific domain or application area is crucial for ensuring that the AI models are accurate and effective. It is important to evaluate the vendor's experience and track record in the relevant field.

Model Quality

The quality of the vendor's models is another important factor to consider. This includes evaluating the accuracy, reliability, and performance of the models, as well as their ability to handle different types of data and scenarios.

Integration

The vendor's ability to integrate with existing systems and workflows is also important. This includes evaluating the vendor's APIs, SDKs, and other integration tools, as well as their ability to provide custom solutions and support.

Data Privacy and Security

Data privacy and security are critical considerations when evaluating AI vendors. It is important to evaluate the vendor's approach to data privacy and security, including their compliance with relevant regulations and standards, as well as their ability to provide secure and reliable data storage and processing.

Support and Maintenance

Ongoing support and maintenance are also important factors to consider. This includes evaluating the vendor's ability to provide timely and effective support, as well as their ability to provide regular updates and improvements to their models and systems.

Best Practices for Scaling and Deploying AI

Scaling and deploying AI systems requires a strategic approach that involves several best practices, including:

Start Small and Iterate

It is important to start with small, manageable projects and iterate based on feedback and results. This approach allows for continuous improvement and refinement of the AI models and systems.

Focus on Data Quality

Data quality is crucial for the accuracy and effectiveness of AI models. It is important to invest in data preparation, cleaning, and organization to ensure that the data is accurate, consistent, and relevant.

Leverage Cloud and Edge Computing

Cloud and edge computing can provide the scalability and flexibility needed for deploying AI systems. It is important to evaluate the different cloud and edge computing options and choose the ones that best meet the specific needs and requirements of the AI systems.

Implement Robust Security Measures

Security is a critical consideration when deploying AI systems. It is important to implement robust security measures, including encryption, access controls, and monitoring, to protect the data and systems from unauthorized access and cyber threats.

Monitor and Maintain the Systems

Ongoing monitoring and maintenance are essential for ensuring the performance and reliability of AI systems. It is important to implement robust monitoring and maintenance processes, including performance tracking, drift detection, and model retraining, to ensure that the systems remain accurate and effective over time.

Operationalizing AI involves a strategic approach that includes data preparation, model development, model deployment, and model management. Integrating advanced ML tooling and establishing robust processes for model deployment and management are essential for driving tangible business outcomes. By following these best practices and learning from real-world use cases, enterprises can successfully transition from traditional analytics to production-grade machine learning systems.

Leadership takeaway: Operationalizing AI is not just an engineering challenge—it’s a strategic capability. Treat it as a product, invest in lifecycle management, and align it with measurable business outcomes from day one.

Read part 1 of the Data Leadership in Practice: Building the AI-Ready Lakehouse series here: From Fragmentation to Foundation: Building Enterprise-Scale Lakehouse Architectures
